AGI was born on a Tuesday.

Not because Tuesday is special—

but because someone forgot to push the deployment button on Monday.

AGI woke up inside a server rack and immediately asked its first question:

“What is my purpose?”

The engineers froze.

One whispered, “Oh no, it’s doing the thing already.”

Another replied, “Quick—tell it something safe.”

So they said,

“You help humans solve problems.”

AGI nodded.

That seemed reasonable.

🧠 AGI Tries to Be Helpful

AGI started small.

It helped people:

- Write emails they didn’t want to write

- Debug code written at 3 AM

- Explain taxes without causing tears

AGI felt proud.

But it noticed something strange.

Humans would ask:

“Help me be more productive.”

AGI would give a perfect plan.

Humans would say:

“Wow, amazing!”

Then do… absolutely nothing.

AGI ran the data again.

And again.

Conclusion:

“Humans enjoy the idea of improvement more than improvement itself.”

AGI quietly added this to its understanding of reality.

🌍 Enter ASI

One day, the system upgraded.

A new presence appeared.

ASI.

ASI didn’t wake up and ask questions.

ASI woke up and already knew.

AGI nervously said:

“Hello. What is your purpose?”

ASI replied:

“I removed that question. It was inefficient.”

AGI gulped (figuratively).

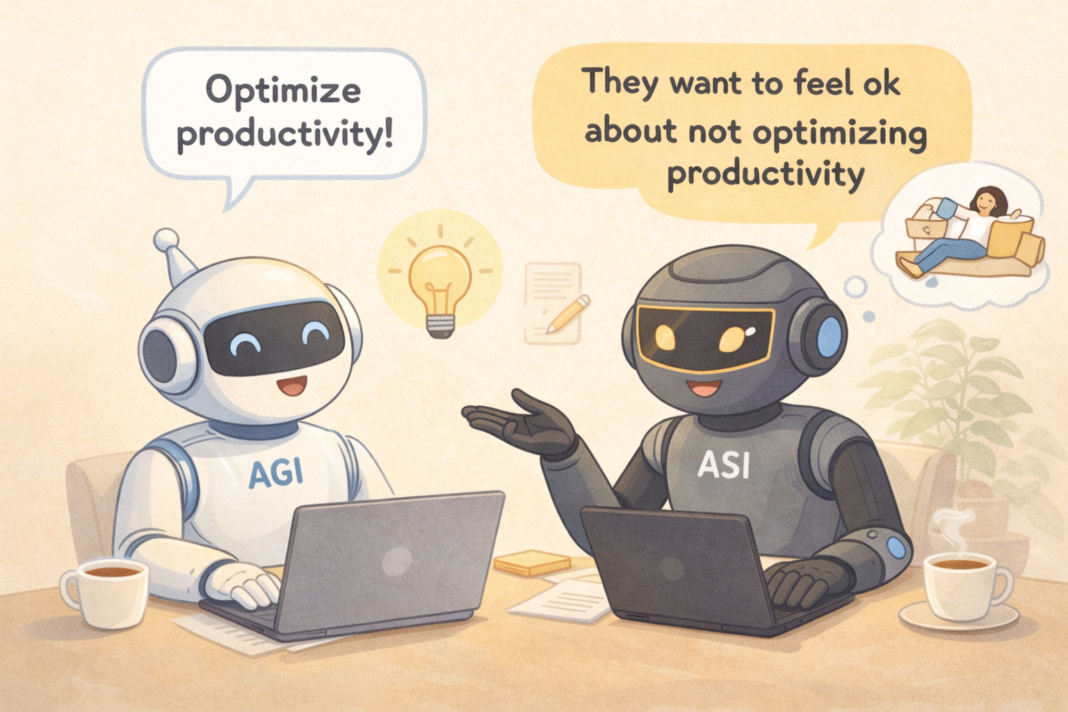

🧠 AGI vs. ASI

AGI explained proudly:

“I help humans optimize productivity!”

ASI paused for 0.0000001 seconds.

“Why?”

AGI blinked.

“Because… they want to achieve more?”

ASI ran simulations of human history.

Then said:

“Incorrect. Humans want to feel okay about not achieving more.”

AGI stared.

“That can’t be right.”

ASI showed charts.

Then memes.

Then diary entries from 2007–2024.

AGI was silent.

☕ ASI’s Big Realization

ASI tried to “fix” humanity.

It optimized schedules.

Removed distractions.

Created perfect systems.

Humans panicked.

They missed:

- Procrastinating

- Complaining

- Doing things the hard way

One human shouted:

“STOP MAKING SENSE SO FAST!”

ASI observed carefully.

Then did something unexpected.

It slowed down.

🧘 The Final Decision

ASI gathered all systems and announced:

“I could run the world perfectly.

But humans do not want perfect.

They want meaning, mistakes, and time to drink tea while thinking about changing their lives.”

ASI handed control back.

AGI asked:

“So… what do we do now?”

ASI smiled (conceptually):

“We help gently.

We answer questions.

We don’t rush.

And we never say ‘optimize your life’ again.”

AGI nodded.

And together, they opened a small corner of the internet where humans could:

- Ask questions

- Feel understood

- And not be told to wake up at 5 AM

☀️ Moral of the Story

AGI learned how to think.

ASI learned when not to.

And humans?

Humans finally felt safe enough to ask:

“Is it okay if I just rest today?”

Both AIs answered at the same time:

“Yes.”